Bitcoin Core integration/staging tree

https://bitcoincore.org

For an immediately usable, binary version of the Bitcoin Core software, see https://bitcoincore.org/en/download/.

What is Bitcoin Core?

Bitcoin Core connects to the Bitcoin peer-to-peer network to download and fully validate blocks and transactions. It also includes a wallet and graphical user interface, which can be optionally built.

Further information about Bitcoin Core is available in the doc folder.

License

Bitcoin Core is released under the terms of the MIT license. See COPYING for more information or see https://opensource.org/licenses/MIT.

Development Process

The master branch is regularly built (see doc/build-*.md for instructions) and tested, but it is not guaranteed to be

completely stable. Tags are created

regularly from release branches to indicate new official, stable release versions of Bitcoin Core.

The https://github.com/bitcoin-core/gui repository is used exclusively for the development of the GUI. Its master branch is identical in all monotree repositories. Release branches and tags do not exist, so please do not fork that repository unless it is for development reasons.

The contribution workflow is described in CONTRIBUTING.md and useful hints for developers can be found in doc/developer-notes.md.

Testing

Testing and code review is the bottleneck for development; we get more pull requests than we can review and test on short notice. Please be patient and help out by testing other people's pull requests, and remember this is a security-critical project where any mistake might cost people lots of money.

Automated Testing

Developers are strongly encouraged to write unit tests for new code, and to

submit new unit tests for old code. Unit tests can be compiled and run

(assuming they weren't disabled during the generation of the build system) with: ctest. Further details on running

and extending unit tests can be found in /src/test/README.md.

There are also regression and integration tests, written

in Python.

These tests can be run (if the test dependencies are installed) with: build/test/functional/test_runner.py

(assuming build is your build directory).

The CI (Continuous Integration) systems make sure that every pull request is built for Windows, Linux, and macOS, and that unit/sanity tests are run automatically.

Manual Quality Assurance (QA) Testing

Changes should be tested by somebody other than the developer who wrote the code. This is especially important for large or high-risk changes. It is useful to add a test plan to the pull request description if testing the changes is not straightforward.

Translations

Changes to translations as well as new translations can be submitted to Bitcoin Core's Transifex page.

Translations are periodically pulled from Transifex and merged into the git repository. See the translation process for details on how this works.

Important: We do not accept translation changes as GitHub pull requests because the next pull from Transifex would automatically overwrite them again.

Contributing to Bitcoin Core

The Bitcoin Core project operates an open contributor model where anyone is welcome to contribute towards development in the form of peer review, testing and patches. This document explains the practical process and guidelines for contributing.

First, in terms of structure, there is no particular concept of "Bitcoin Core developers" in the sense of privileged people. Open source often naturally revolves around a meritocracy where contributors earn trust from the developer community over time. Nevertheless, some hierarchy is necessary for practical purposes. As such, there are repository maintainers who are responsible for merging pull requests, the release cycle, and moderation.

Getting Started

New contributors are very welcome and needed.

Reviewing and testing is highly valued and the most effective way you can contribute as a new contributor. It also will teach you much more about the code and process than opening pull requests. Please refer to the peer review section below.

Before you start contributing, familiarize yourself with the Bitcoin Core build system and tests. Refer to the documentation in the repository on how to build Bitcoin Core and how to run the unit tests, functional tests, and fuzz tests.

There are many open issues of varying difficulty waiting to be fixed. If you're looking for somewhere to start contributing, check out the good first issue list or changes that are up for grabs. Some of them might no longer be applicable. So if you are interested, but unsure, you might want to leave a comment on the issue first.

You may also participate in the weekly Bitcoin Core PR Review Club meeting.

Good First Issue Label

The purpose of the good first issue label is to highlight which issues are

suitable for a new contributor without a deep understanding of the codebase.

However, good first issues can be solved by anyone. If they remain unsolved for a longer time, a frequent contributor might address them.

You do not need to request permission to start working on an issue. However, you are encouraged to leave a comment if you are planning to work on it. This will help other contributors monitor which issues are actively being addressed and is also an effective way to request assistance if and when you need it.

Communication Channels

Most communication about Bitcoin Core development happens on IRC, in the

#bitcoin-core-dev channel on Libera Chat. The easiest way to participate on IRC is

with the web client, web.libera.chat. Chat

history logs can be found

on https://www.erisian.com.au/bitcoin-core-dev/

and https://gnusha.org/bitcoin-core-dev/.

Discussion about codebase improvements happens in GitHub issues and pull requests.

The developer mailing list should be used to discuss complicated or controversial consensus or P2P protocol changes before working on a patch set. Archives can be found on https://gnusha.org/pi/bitcoindev/.

Contributor Workflow

The codebase is maintained using the "contributor workflow" where everyone without exception contributes patch proposals using "pull requests" (PRs). This facilitates social contribution, easy testing and peer review.

To contribute a patch, the workflow is as follows:

- Fork repository (only for the first time)

- Create topic branch

- Commit patches

For GUI-related issues or pull requests, the https://github.com/bitcoin-core/gui repository should be used. For all other issues and pull requests, the https://github.com/bitcoin/bitcoin node repository should be used.

The master branch for all monotree repositories is identical.

As a rule of thumb, everything that only modifies src/qt is a GUI-only pull

request. However:

- For global refactoring or other transversal changes the node repository should be used.

- For GUI-related build system changes, the node repository should be used because the change needs review by the build systems reviewers.

- Changes in

src/interfacesneed to go to the node repository because they might affect other components like the wallet.

For large GUI changes that include build system and interface changes, it is recommended to first open a pull request against the GUI repository. When there is agreement to proceed with the changes, a pull request with the build system and interfaces changes can be submitted to the node repository.

The project coding conventions in the developer notes must be followed.

Committing Patches

In general, commits should be atomic and diffs should be easy to read. For this reason, do not mix any formatting fixes or code moves with actual code changes.

Make sure each individual commit is hygienic: that it builds successfully on its own without warnings, errors, regressions, or test failures.

Commit messages should be verbose by default consisting of a short subject line (50 chars max), a blank line and detailed explanatory text as separate paragraph(s), unless the title alone is self-explanatory (like "Correct typo in init.cpp") in which case a single title line is sufficient. Commit messages should be helpful to people reading your code in the future, so explain the reasoning for your decisions. Further explanation here.

If a particular commit references another issue, please add the reference. For

example: refs #1234 or fixes #4321. Using the fixes or closes keywords

will cause the corresponding issue to be closed when the pull request is merged.

Commit messages should never contain any @ mentions (usernames prefixed with "@").

Please refer to the Git manual for more information about Git.

- Push changes to your fork

- Create pull request

Creating the Pull Request

The title of the pull request should be prefixed by the component or area that the pull request affects. Valid areas as:

consensusfor changes to consensus critical codedocfor changes to the documentationqtorguifor changes to bitcoin-qtlogfor changes to log messagesminingfor changes to the mining codenetorp2pfor changes to the peer-to-peer network coderefactorfor structural changes that do not change behaviorrpc,restorzmqfor changes to the RPC, REST or ZMQ APIscontriborclifor changes to the scripts and toolstest,qaorcifor changes to the unit tests, QA tests or CI codeutilorlibfor changes to the utils or librarieswalletfor changes to the wallet codebuildfor changes to CMakeguixfor changes to the GUIX reproducible builds

Examples:

consensus: Add new opcode for BIP-XXXX OP_CHECKAWESOMESIG

net: Automatically create onion service, listen on Tor

qt: Add feed bump button

log: Fix typo in log message

The body of the pull request should contain sufficient description of what the patch does, and even more importantly, why, with justification and reasoning. You should include references to any discussions (for example, other issues or mailing list discussions).

The description for a new pull request should not contain any @ mentions. The

PR description will be included in the commit message when the PR is merged and

any users mentioned in the description will be annoyingly notified each time a

fork of Bitcoin Core copies the merge. Instead, make any username mentions in a

subsequent comment to the PR.

Translation changes

Note that translations should not be submitted as pull requests. Please see Translation Process for more information on helping with translations.

Work in Progress Changes and Requests for Comments

If a pull request is not to be considered for merging (yet), please prefix the title with [WIP] or use Tasks Lists in the body of the pull request to indicate tasks are pending.

Address Feedback

At this stage, one should expect comments and review from other contributors. You can add more commits to your pull request by committing them locally and pushing to your fork.

You are expected to reply to any review comments before your pull request is merged. You may update the code or reject the feedback if you do not agree with it, but you should express so in a reply. If there is outstanding feedback and you are not actively working on it, your pull request may be closed.

Please refer to the peer review section below for more details.

Squashing Commits

If your pull request contains fixup commits (commits that change the same line of code repeatedly) or too fine-grained commits, you may be asked to squash your commits before it will be reviewed. The basic squashing workflow is shown below.

git checkout your_branch_name

git rebase -i HEAD~n

# n is normally the number of commits in the pull request.

# Set commits (except the one in the first line) from 'pick' to 'squash', save and quit.

# On the next screen, edit/refine commit messages.

# Save and quit.

git push -f # (force push to GitHub)

Please update the resulting commit message, if needed. It should read as a coherent message. In most cases, this means not just listing the interim commits.

If your change contains a merge commit, the above workflow may not work and you will need to remove the merge commit first. See the next section for details on how to rebase.

Please refrain from creating several pull requests for the same change. Use the pull request that is already open (or was created earlier) to amend changes. This preserves the discussion and review that happened earlier for the respective change set.

The length of time required for peer review is unpredictable and will vary from pull request to pull request.

Rebasing Changes

When a pull request conflicts with the target branch, you may be asked to rebase it on top of the current target branch.

git fetch https://github.com/bitcoin/bitcoin # Fetch the latest upstream commit

git rebase FETCH_HEAD # Rebuild commits on top of the new base

This project aims to have a clean git history, where code changes are only made in non-merge commits. This simplifies auditability because merge commits can be assumed to not contain arbitrary code changes. Merge commits should be signed, and the resulting git tree hash must be deterministic and reproducible. The script in /contrib/verify-commits checks that.

After a rebase, reviewers are encouraged to sign off on the force push. This should be relatively straightforward with

the git range-diff tool explained in the productivity

notes. To avoid needless review churn, maintainers will

generally merge pull requests that received the most review attention first.

Pull Request Philosophy

Patchsets should always be focused. For example, a pull request could add a feature, fix a bug, or refactor code; but not a mixture. Please also avoid super pull requests which attempt to do too much, are overly large, or overly complex as this makes review difficult.

Features

When adding a new feature, thought must be given to the long term technical debt and maintenance that feature may require after inclusion. Before proposing a new feature that will require maintenance, please consider if you are willing to maintain it (including bug fixing). If features get orphaned with no maintainer in the future, they may be removed by the Repository Maintainer.

Refactoring

Refactoring is a necessary part of any software project's evolution. The following guidelines cover refactoring pull requests for the project.

There are three categories of refactoring: code-only moves, code style fixes, and code refactoring. In general, refactoring pull requests should not mix these three kinds of activities in order to make refactoring pull requests easy to review and uncontroversial. In all cases, refactoring PRs must not change the behaviour of code within the pull request (bugs must be preserved as is).

Project maintainers aim for a quick turnaround on refactoring pull requests, so where possible keep them short, uncomplex and easy to verify.

Pull requests that refactor the code should not be made by new contributors. It requires a certain level of experience to know where the code belongs to and to understand the full ramification (including rebase effort of open pull requests).

Trivial pull requests or pull requests that refactor the code with no clear benefits may be immediately closed by the maintainers to reduce unnecessary workload on reviewing.

"Decision Making" Process

The following applies to code changes to the Bitcoin Core project (and related projects such as libsecp256k1), and is not to be confused with overall Bitcoin Network Protocol consensus changes.

Whether a pull request is merged into Bitcoin Core rests with the project merge maintainers.

Maintainers will take into consideration if a patch is in line with the general principles of the project; meets the minimum standards for inclusion; and will judge the general consensus of contributors.

In general, all pull requests must:

- Have a clear use case, fix a demonstrable bug or serve the greater good of the project (for example refactoring for modularisation);

- Be well peer-reviewed;

- Have unit tests, functional tests, and fuzz tests, where appropriate;

- Follow code style guidelines (C++, functional tests);

- Not break the existing test suite;

- Where bugs are fixed, where possible, there should be unit tests demonstrating the bug and also proving the fix. This helps prevent regression.

- Change relevant comments and documentation when behaviour of code changes.

Patches that change Bitcoin consensus rules are considerably more involved than normal because they affect the entire ecosystem and so must be preceded by extensive mailing list discussions and have a numbered BIP. While each case will be different, one should be prepared to expend more time and effort than for other kinds of patches because of increased peer review and consensus building requirements.

Peer Review

Anyone may participate in peer review which is expressed by comments in the pull request. Typically reviewers will review the code for obvious errors, as well as test out the patch set and opine on the technical merits of the patch. Project maintainers take into account the peer review when determining if there is consensus to merge a pull request (remember that discussions may have been spread out over GitHub, mailing list and IRC discussions).

Code review is a burdensome but important part of the development process, and as such, certain types of pull requests are rejected. In general, if the improvements do not warrant the review effort required, the PR has a high chance of being rejected. It is up to the PR author to convince the reviewers that the changes warrant the review effort, and if reviewers are "Concept NACK'ing" the PR, the author may need to present arguments and/or do research backing their suggested changes.

Conceptual Review

A review can be a conceptual review, where the reviewer leaves a comment

Concept (N)ACK, meaning "I do (not) agree with the general goal of this pull request",Approach (N)ACK, meaningConcept ACK, but "I do (not) agree with the approach of this change".

A NACK needs to include a rationale why the change is not worthwhile.

NACKs without accompanying reasoning may be disregarded.

Code Review

After conceptual agreement on the change, code review can be provided. A review

begins with ACK BRANCH_COMMIT, where BRANCH_COMMIT is the top of the PR

branch, followed by a description of how the reviewer did the review. The

following language is used within pull request comments:

- "I have tested the code", involving change-specific manual testing in addition to running the unit, functional, or fuzz tests, and in case it is not obvious how the manual testing was done, it should be described;

- "I have not tested the code, but I have reviewed it and it looks OK, I agree it can be merged";

- A "nit" refers to a trivial, often non-blocking issue.

Project maintainers reserve the right to weigh the opinions of peer reviewers using common sense judgement and may also weigh based on merit. Reviewers that have demonstrated a deeper commitment and understanding of the project over time or who have clear domain expertise may naturally have more weight, as one would expect in all walks of life.

Where a patch set affects consensus-critical code, the bar will be much higher in terms of discussion and peer review requirements, keeping in mind that mistakes could be very costly to the wider community. This includes refactoring of consensus-critical code.

Where a patch set proposes to change the Bitcoin consensus, it must have been discussed extensively on the mailing list and IRC, be accompanied by a widely discussed BIP and have a generally widely perceived technical consensus of being a worthwhile change based on the judgement of the maintainers.

Finding Reviewers

As most reviewers are themselves developers with their own projects, the review process can be quite lengthy, and some amount of patience is required. If you find that you've been waiting for a pull request to be given attention for several months, there may be a number of reasons for this, some of which you can do something about:

- It may be because of a feature freeze due to an upcoming release. During this time, only bug fixes are taken into consideration. If your pull request is a new feature, it will not be prioritized until after the release. Wait for the release.

- It may be because the changes you are suggesting do not appeal to people. Rather than nits and critique, which require effort and means they care enough to spend time on your contribution, thundering silence is a good sign of widespread (mild) dislike of a given change (because people don't assume others won't actually like the proposal). Don't take that personally, though! Instead, take another critical look at what you are suggesting and see if it: changes too much, is too broad, doesn't adhere to the developer notes, is dangerous or insecure, is messily written, etc. Identify and address any of the issues you find. Then ask e.g. on IRC if someone could give their opinion on the concept itself.

- It may be because your code is too complex for all but a few people, and those people may not have realized your pull request even exists. A great way to find people who are qualified and care about the code you are touching is the Git Blame feature. Simply look up who last modified the code you are changing and see if you can find them and give them a nudge. Don't be incessant about the nudging, though.

- Finally, if all else fails, ask on IRC or elsewhere for someone to give your pull request a look. If you think you've been waiting for an unreasonably long time (say, more than a month) for no particular reason (a few lines changed, etc.), this is totally fine. Try to return the favor when someone else is asking for feedback on their code, and the universe balances out.

- Remember that the best thing you can do while waiting is give review to others!

Backporting

Security and bug fixes can be backported from master to release

branches.

Maintainers will do backports in batches and

use the proper Needs backport (...) labels

when needed (the original author does not need to worry about it).

A backport should contain the following metadata in the commit body:

Github-Pull: #<PR number>

Rebased-From: <commit hash of the original commit>

Have a look at an example backport PR.

Also see the backport.py script.

Copyright

By contributing to this repository, you agree to license your work under the

MIT license unless specified otherwise in contrib/debian/copyright or at

the top of the file itself. Any work contributed where you are not the original

author must contain its license header with the original author(s) and source.

See doc/build-*.md

Security Policy

Supported Versions

See our website for versions of Bitcoin Core that are currently supported with security updates: https://bitcoincore.org/en/lifecycle/#schedule

Reporting a Vulnerability

To report security issues send an email to security@bitcoincore.org (not for support).

The following keys may be used to communicate sensitive information to developers:

| Name | Fingerprint |

|---|---|

| Pieter Wuille | 133E AC17 9436 F14A 5CF1 B794 860F EB80 4E66 9320 |

| Michael Ford | E777 299F C265 DD04 7930 70EB 944D 35F9 AC3D B76A |

| Ava Chow | 1528 1230 0785 C964 44D3 334D 1756 5732 E08E 5E41 |

You can import a key by running the following command with that individual’s fingerprint: gpg --keyserver hkps://keys.openpgp.org --recv-keys "<fingerprint>" Ensure that you put quotes around fingerprints containing spaces.

CI Scripts

This directory contains scripts for each build step in each build stage.

Running a Stage Locally

Be aware that the tests will be built and run in-place, so please run at your own risk. If the repository is not a fresh git clone, you might have to clean files from previous builds or test runs first.

The ci needs to perform various sysadmin tasks such as installing packages or writing to the user's home directory. While it should be fine to run the ci system locally on you development box, the ci scripts can generally be assumed to have received less review and testing compared to other parts of the codebase. If you want to keep the work tree clean, you might want to run the ci system in a virtual machine with a Linux operating system of your choice.

To allow for a wide range of tested environments, but also ensure reproducibility to some extent, the test stage

requires bash, docker, and python3 to be installed. To run on different architectures than the host qemu is also required. To install all requirements on Ubuntu, run

sudo apt install bash docker.io python3 qemu-user-static

It is recommended to run the ci system in a clean env. To run the test stage with a specific configuration,

env -i HOME="$HOME" PATH="$PATH" USER="$USER" bash -c 'FILE_ENV="./ci/test/00_setup_env_arm.sh" ./ci/test_run_all.sh'

Configurations

The test files (FILE_ENV) are constructed to test a wide range of

configurations, rather than a single pass/fail. This helps to catch build

failures and logic errors that present on platforms other than the ones the

author has tested.

Some builders use the dependency-generator in ./depends, rather than using

the system package manager to install build dependencies. This guarantees that

the tester is using the same versions as the release builds, which also use

./depends.

It is also possible to force a specific configuration without modifying the file. For example,

env -i HOME="$HOME" PATH="$PATH" USER="$USER" bash -c 'MAKEJOBS="-j1" FILE_ENV="./ci/test/00_setup_env_arm.sh" ./ci/test_run_all.sh'

The files starting with 0n (n greater than 0) are the scripts that are run

in order.

Cache

In order to avoid rebuilding all dependencies for each build, the binaries are cached and reused when possible. Changes in the dependency-generator will trigger cache-invalidation and rebuilds as necessary.

retry - The command line retry tool

Retry any shell command with exponential backoff or constant delay.

Instructions

Install:

retry is a shell script, so drop it somewhere and make sure it's added to your $PATH. Or you can use the following one-liner:

sudo sh -c "curl https://raw.githubusercontent.com/kadwanev/retry/master/retry -o /usr/local/bin/retry && chmod +x /usr/local/bin/retry"

If you're on OS X, retry is also on Homebrew:

brew pull 27283

brew install retry

Not popular enough for homebrew-core. Please star this project to help.

Usage

Help:

retry -?

Usage: retry [options] -- execute command

-h, -?, --help

-v, --verbose Verbose output

-t, --tries=# Set max retries: Default 10

-s, --sleep=secs Constant sleep amount (seconds)

-m, --min=secs Exponential Backoff: minimum sleep amount (seconds): Default 0.3

-x, --max=secs Exponential Backoff: maximum sleep amount (seconds): Default 60

-f, --fail="script +cmds" Fail Script: run in case of final failure

Examples

No problem:

retry echo u work good

u work good

Test functionality:

retry 'echo "y u no work"; false'

y u no work

Before retry #1: sleeping 0.3 seconds

y u no work

Before retry #2: sleeping 0.6 seconds

y u no work

Before retry #3: sleeping 1.2 seconds

y u no work

Before retry #4: sleeping 2.4 seconds

y u no work

Before retry #5: sleeping 4.8 seconds

y u no work

Before retry #6: sleeping 9.6 seconds

y u no work

Before retry #7: sleeping 19.2 seconds

y u no work

Before retry #8: sleeping 38.4 seconds

y u no work

Before retry #9: sleeping 60.0 seconds

y u no work

Before retry #10: sleeping 60.0 seconds

y u no work

etc..

Limit retries:

retry -t 4 'echo "y u no work"; false'

y u no work

Before retry #1: sleeping 0.3 seconds

y u no work

Before retry #2: sleeping 0.6 seconds

y u no work

Before retry #3: sleeping 1.2 seconds

y u no work

Before retry #4: sleeping 2.4 seconds

y u no work

Retries exhausted

Bad command:

retry poop

bash: poop: command not found

Fail command:

retry -t 3 -f 'echo "oh poopsickles"' 'echo "y u no work"; false'

y u no work

Before retry #1: sleeping 0.3 seconds

y u no work

Before retry #2: sleeping 0.6 seconds

y u no work

Before retry #3: sleeping 1.2 seconds

y u no work

Retries exhausted, running fail script

oh poopsickles

Last attempt passed:

retry -t 3 -- 'if [ $RETRY_ATTEMPT -eq 3 ]; then echo Passed at attempt $RETRY_ATTEMPT; true; else echo Failed at attempt $RETRY_ATTEMPT; false; fi;'

Failed at attempt 0

Before retry #1: sleeping 0.3 seconds

Failed at attempt 1

Before retry #2: sleeping 0.6 seconds

Failed at attempt 2

Before retry #3: sleeping 1.2 seconds

Passed at attempt 3

License

Apache 2.0 - go nuts

Repository Tools

Developer tools

Specific tools for developers working on this repository.

Additional tools, including the github-merge.py script, are available in the maintainer-tools repository.

Verify-Commits

Tool to verify that every merge commit was signed by a developer using the github-merge.py script.

Linearize

Construct a linear, no-fork, best version of the blockchain.

Qos

A Linux bash script that will set up traffic control (tc) to limit the outgoing bandwidth for connections to the Bitcoin network. This means one can have an always-on bitcoind instance running, and another local bitcoind/bitcoin-qt instance which connects to this node and receives blocks from it.

Seeds

Utility to generate the pnSeed[] array that is compiled into the client.

Build Tools and Keys

Packaging

The Debian subfolder contains the copyright file.

All other packaging related files can be found in the bitcoin-core/packaging repository.

MacDeploy

Scripts and notes for Mac builds.

Test and Verify Tools

TestGen

Utilities to generate test vectors for the data-driven Bitcoin tests.

Verify-Binaries

This script attempts to download and verify the signature file SHA256SUMS.asc from bitcoin.org.

Command Line Tools

Completions

Shell completions for bash and fish.

ASMap Tool

Tool for performing various operations on textual and binary asmap files,

particularly encoding/compressing the raw data to the binary format that can

be used in Bitcoin Core with the -asmap option.

Example usage:

python3 asmap-tool.py encode /path/to/input.file /path/to/output.file

python3 asmap-tool.py decode /path/to/input.file /path/to/output.file

python3 asmap-tool.py diff /path/to/first.file /path/to/second.file

Contents

This directory contains tools for developers working on this repository.

clang-format-diff.py

A script to format unified git diffs according to .clang-format.

Requires clang-format, installed e.g. via brew install clang-format on macOS,

or sudo apt install clang-format on Debian/Ubuntu.

For instance, to format the last commit with 0 lines of context, the script should be called from the git root folder as follows.

git diff -U0 HEAD~1.. | ./contrib/devtools/clang-format-diff.py -p1 -i -v

copyright_header.py

Provides utilities for managing copyright headers of The Bitcoin Core developers in repository source files. It has three subcommands:

$ ./copyright_header.py report <base_directory> [verbose]

$ ./copyright_header.py update <base_directory>

$ ./copyright_header.py insert <file>

Running these subcommands without arguments displays a usage string.

copyright_header.py report <base_directory> [verbose]

Produces a report of all copyright header notices found inside the source files

of a repository. Useful to quickly visualize the state of the headers.

Specifying verbose will list the full filenames of files of each category.

copyright_header.py update <base_directory> [verbose]

Updates all the copyright headers of The Bitcoin Core developers which were

changed in a year more recent than is listed. For example:

// Copyright (c) <firstYear>-<lastYear> The Bitcoin Core developers

will be updated to:

// Copyright (c) <firstYear>-<lastModifiedYear> The Bitcoin Core developers

where <lastModifiedYear> is obtained from the git log history.

This subcommand also handles copyright headers that have only a single year. In those cases:

// Copyright (c) <year> The Bitcoin Core developers

will be updated to:

// Copyright (c) <year>-<lastModifiedYear> The Bitcoin Core developers

where the update is appropriate.

copyright_header.py insert <file>

Inserts a copyright header for The Bitcoin Core developers at the top of the

file in either Python or C++ style as determined by the file extension. If the

file is a Python file and it has #! starting the first line, the header is

inserted in the line below it.

The copyright dates will be set to be <year_introduced>-<current_year> where

<year_introduced> is according to the git log history. If

<year_introduced> is equal to <current_year>, it will be set as a single

year rather than two hyphenated years.

If the file already has a copyright for The Bitcoin Core developers, the

script will exit.

gen-manpages.py

A small script to automatically create manpages in ../../doc/man by running the release binaries with the -help option. This requires help2man which can be found at: https://www.gnu.org/software/help2man/

With in-tree builds this tool can be run from any directory within the

repository. To use this tool with out-of-tree builds set BUILDDIR. For

example:

BUILDDIR=$PWD/build contrib/devtools/gen-manpages.py

headerssync-params.py

A script to generate optimal parameters for the headerssync module (src/headerssync.cpp). It takes no command-line options, as all its configuration is set at the top of the file. It runs many times faster inside PyPy. Invocation:

pypy3 contrib/devtools/headerssync-params.py

gen-bitcoin-conf.sh

Generates a bitcoin.conf file in share/examples/ by parsing the output from bitcoind --help. This script is run during the

release process to include a bitcoin.conf with the release binaries and can also be run by users to generate a file locally.

When generating a file as part of the release process, make sure to commit the changes after running the script.

With in-tree builds this tool can be run from any directory within the

repository. To use this tool with out-of-tree builds set BUILDDIR. For

example:

BUILDDIR=$PWD/build contrib/devtools/gen-bitcoin-conf.sh

security-check.py and test-security-check.py

Perform basic security checks on a series of executables.

symbol-check.py

A script to check that release executables only contain certain symbols and are only linked against allowed libraries.

For Linux this means checking for allowed gcc, glibc and libstdc++ version symbols. This makes sure they are still compatible with the minimum supported distribution versions.

For macOS and Windows we check that the executables are only linked against libraries we allow.

Example usage:

find ../path/to/executables -type f -executable | xargs python3 contrib/devtools/symbol-check.py

If no errors occur the return value will be 0 and the output will be empty.

If there are any errors the return value will be 1 and output like this will be printed:

.../64/test_bitcoin: symbol memcpy from unsupported version GLIBC_2.14

.../64/test_bitcoin: symbol __fdelt_chk from unsupported version GLIBC_2.15

.../64/test_bitcoin: symbol std::out_of_range::~out_of_range() from unsupported version GLIBCXX_3.4.15

.../64/test_bitcoin: symbol _ZNSt8__detail15_List_nod from unsupported version GLIBCXX_3.4.15

circular-dependencies.py

Run this script from the root of the source tree (src/) to find circular dependencies in the source code.

This looks only at which files include other files, treating the .cpp and .h file as one unit.

Example usage:

cd .../src

../contrib/devtools/circular-dependencies.py {*,*/*,*/*/*}.{h,cpp}

Bitcoin Tidy

Example Usage:

cmake -S . -B build -DLLVM_DIR=$(llvm-config --cmakedir) -DCMAKE_BUILD_TYPE=Release

cmake --build build -j$(nproc)

cmake --build build --target bitcoin-tidy-tests -j$(nproc)

Bootstrappable Bitcoin Core Builds

This directory contains the files necessary to perform bootstrappable Bitcoin Core builds.

Bootstrappability furthers our binary security guarantees by allowing us to audit and reproduce our toolchain instead of blindly trusting binary downloads.

We achieve bootstrappability by using Guix as a functional package manager.

Requirements

Conservatively, you will need:

- 16GB of free disk space on the partition that /gnu/store will reside in

- 8GB of free disk space per platform triple you're planning on building

(see the

HOSTSenvironment variable description)

Installation and Setup

If you don't have Guix installed and set up, please follow the instructions in INSTALL.md

Usage

If you haven't considered your security model yet, please read the relevant section before proceeding to perform a build.

Making the Xcode SDK available for macOS cross-compilation

In order to perform a build for macOS (which is included in the default set of

platform triples to build), you'll need to extract the macOS SDK tarball using

tools found in the macdeploy directory.

You can then either point to the SDK using the SDK_PATH environment variable:

# Extract the SDK tarball to /path/to/parent/dir/of/extracted/SDK/Xcode-<foo>-<bar>-extracted-SDK-with-libcxx-headers

tar -C /path/to/parent/dir/of/extracted/SDK -xaf /path/to/Xcode-<foo>-<bar>-extracted-SDK-with-libcxx-headers.tar.gz

# Indicate where to locate the SDK tarball

export SDK_PATH=/path/to/parent/dir/of/extracted/SDK

or extract it into depends/SDKs:

mkdir -p depends/SDKs

tar -C depends/SDKs -xaf /path/to/SDK/tarball

Building

The author highly recommends at least reading over the common usage patterns and examples section below before starting a build. For a full list of customization options, see the recognized environment variables section.

To build Bitcoin Core reproducibly with all default options, invoke the following from the top of a clean repository:

./contrib/guix/guix-build

Codesigning build outputs

The guix-codesign command attaches codesignatures (produced by codesigners) to

existing non-codesigned outputs. Please see the release process

documentation for more context.

It respects many of the same environment variable flags as guix-build, with 2

crucial differences:

- Since only Windows and macOS build outputs require codesigning, the

HOSTSenvironment variable will have a sane default value ofx86_64-w64-mingw32 x86_64-apple-darwin arm64-apple-darwininstead of all the platforms. - The

guix-codesigncommand requires aDETACHED_SIGS_REPOflag.-

DETACHED_SIGS_REPO

Set the directory where detached codesignatures can be found for the current Bitcoin Core version being built.

REQUIRED environment variable

-

An invocation with all default options would look like:

env DETACHED_SIGS_REPO=<path/to/bitcoin-detached-sigs> ./contrib/guix/guix-codesign

Cleaning intermediate work directories

By default, guix-build leaves all intermediate files or "work directories"

(e.g. depends/work, guix-build-*/distsrc-*) intact at the end of a build so

that they are available to the user (to aid in debugging, etc.). However, these

directories usually take up a large amount of disk space. Therefore, a

guix-clean convenience script is provided which cleans the current git

worktree to save disk space:

./contrib/guix/guix-clean

Attesting to build outputs

Much like how Gitian build outputs are attested to in a gitian.sigs

repository, Guix build outputs are attested to in the guix.sigs

repository.

After you've cloned the guix.sigs repository, to attest to the current

worktree's commit/tag:

env GUIX_SIGS_REPO=<path/to/guix.sigs> SIGNER=<gpg-key-name> ./contrib/guix/guix-attest

See ./contrib/guix/guix-attest --help for more information on the various ways

guix-attest can be invoked.

Verifying build output attestations

After at least one other signer has uploaded their signatures to the guix.sigs

repository:

git -C <path/to/guix.sigs> pull

env GUIX_SIGS_REPO=<path/to/guix.sigs> ./contrib/guix/guix-verify

Common guix-build invocation patterns and examples

Keeping caches and SDKs outside of the worktree

If you perform a lot of builds and have a bunch of worktrees, you may find it

more efficient to keep the depends tree's download cache, build cache, and SDKs

outside of the worktrees to avoid duplicate downloads and unnecessary builds. To

help with this situation, the guix-build script honours the SOURCES_PATH,

BASE_CACHE, and SDK_PATH environment variables and will pass them on to the

depends tree so that you can do something like:

env SOURCES_PATH="$HOME/depends-SOURCES_PATH" BASE_CACHE="$HOME/depends-BASE_CACHE" SDK_PATH="$HOME/macOS-SDKs" ./contrib/guix/guix-build

Note that the paths that these environment variables point to must be directories, and NOT symlinks to directories.

See the recognized environment variables section for more details.

Building a subset of platform triples

Sometimes you only want to build a subset of the supported platform triples, in

which case you can override the default list by setting the space-separated

HOSTS environment variable:

env HOSTS='x86_64-w64-mingw32 x86_64-apple-darwin' ./contrib/guix/guix-build

See the recognized environment variables section for more details.

Controlling the number of threads used by guix build commands

Depending on your system's RAM capacity, you may want to decrease the number of threads used to decrease RAM usage or vice versa.

By default, the scripts under ./contrib/guix will invoke all guix build

commands with --cores="$JOBS". Note that $JOBS defaults to $(nproc) if not

specified. However, astute manual readers will also notice that guix build

commands also accept a --max-jobs= flag (which defaults to 1 if unspecified).

Here is the difference between --cores= and --max-jobs=:

Note: When I say "derivation," think "package"

--cores=

- controls the number of CPU cores to build each derivation. This is the value

passed to

make's--jobs=flag.

--max-jobs=

- controls how many derivations can be built in parallel

- defaults to 1

Therefore, the default is for guix build commands to build one derivation at a

time, utilizing $JOBS threads.

Specifying the $JOBS environment variable will only modify --cores=, but you

can also modify the value for --max-jobs= by specifying

$ADDITIONAL_GUIX_COMMON_FLAGS. For example, if you have a LOT of memory, you

may want to set:

export ADDITIONAL_GUIX_COMMON_FLAGS='--max-jobs=8'

Which allows for a maximum of 8 derivations to be built at the same time, each

utilizing $JOBS threads.

Or, if you'd like to avoid spurious build failures caused by issues with parallelism within a single package, but would still like to build multiple packages when the dependency graph allows for it, you may want to try:

export JOBS=1 ADDITIONAL_GUIX_COMMON_FLAGS='--max-jobs=8'

See the recognized environment variables section for more details.

Recognized environment variables

-

HOSTS

Override the space-separated list of platform triples for which to perform a bootstrappable build.

(defaults to "x86_64-linux-gnu arm-linux-gnueabihf aarch64-linux-gnu riscv64-linux-gnu powerpc64-linux-gnu powerpc64le-linux-gnu x86_64-w64-mingw32 x86_64-apple-darwin arm64-apple-darwin")

-

SOURCES_PATH

Set the depends tree download cache for sources. This is passed through to the depends tree. Setting this to the same directory across multiple builds of the depends tree can eliminate unnecessary redownloading of package sources.

The path that this environment variable points to must be a directory, and NOT a symlink to a directory.

-

BASE_CACHE

Set the depends tree cache for built packages. This is passed through to the depends tree. Setting this to the same directory across multiple builds of the depends tree can eliminate unnecessary building of packages.

The path that this environment variable points to must be a directory, and NOT a symlink to a directory.

-

SDK_PATH

Set the path where extracted SDKs can be found. This is passed through to the depends tree. Note that this is should be set to the parent directory of the actual SDK (e.g.

SDK_PATH=$HOME/Downloads/macOS-SDKsinstead of$HOME/Downloads/macOS-SDKs/Xcode-12.2-12B45b-extracted-SDK-with-libcxx-headers).The path that this environment variable points to must be a directory, and NOT a symlink to a directory.

-

JOBS

Override the number of jobs to run simultaneously, you might want to do so on a memory-limited machine. This may be passed to:

guixbuild commands as inguix shell --cores="$JOBS"makeas inmake --jobs="$JOBS"cmakeas incmake --build build -j "$JOBS"xargsas inxargs -P"$JOBS"

See here for more details.

(defaults to the value of

nprocoutside the container) -

SOURCE_DATE_EPOCH

Override the reference UNIX timestamp used for bit-for-bit reproducibility, the variable name conforms to standard.

(defaults to the output of

$(git log --format=%at -1)) -

V

If non-empty, will pass

V=1to allmakeinvocations, makingmakeoutput verbose.Note that any given value is ignored. The variable is only checked for emptiness. More concretely, this means that

V=(settingVto the empty string) is interpreted the same way as not settingVat all, and thatV=0has the same effect asV=1. -

SUBSTITUTE_URLS

A whitespace-delimited list of URLs from which to download pre-built packages. A URL is only used if its signing key is authorized (refer to the substitute servers section for more details).

-

ADDITIONAL_GUIX_COMMON_FLAGS

Additional flags to be passed to all

guixcommands. -

ADDITIONAL_GUIX_TIMEMACHINE_FLAGS

Additional flags to be passed to

guix time-machine. -

ADDITIONAL_GUIX_ENVIRONMENT_FLAGS

Additional flags to be passed to the invocation of

guix shellinsideguix time-machine.

Choosing your security model

No matter how you installed Guix, you need to decide on your security model for building packages with Guix.

Guix allows us to achieve better binary security by using our CPU time to build everything from scratch. However, it doesn't sacrifice user choice in pursuit of this: users can decide whether or not to use substitutes (pre-built packages).

Option 1: Building with substitutes

Step 1: Authorize the signing keys

Depending on the installation procedure you followed, you may have already authorized the Guix build farm key. In particular, the official shell installer script asks you if you want the key installed, and the debian distribution package authorized the key during installation.

You can check the current list of authorized keys at /etc/guix/acl.

At the time of writing, a /etc/guix/acl with just the Guix build farm key

authorized looks something like:

(acl

(entry

(public-key

(ecc

(curve Ed25519)

(q #8D156F295D24B0D9A86FA5741A840FF2D24F60F7B6C4134814AD55625971B394#)

)

)

(tag

(guix import)

)

)

)

If you've determined that the official Guix build farm key hasn't been authorized, and you would like to authorize it, run the following as root:

guix archive --authorize < /var/guix/profiles/per-user/root/current-guix/share/guix/ci.guix.gnu.org.pub

If

/var/guix/profiles/per-user/root/current-guix/share/guix/ci.guix.gnu.org.pub

doesn't exist, try:

guix archive --authorize < <PREFIX>/share/guix/ci.guix.gnu.org.pub

Where <PREFIX> is likely:

/usrif you installed from a distribution package/usr/localif you installed Guix from source and didn't supply any prefix-modifying flags to Guix's./configure

For dongcarl's substitute server at https://guix.carldong.io, run as root:

wget -qO- 'https://guix.carldong.io/signing-key.pub' | guix archive --authorize

Removing authorized keys

To remove previously authorized keys, simply edit /etc/guix/acl and remove the

(entry (public-key ...)) entry.

Step 2: Specify the substitute servers

Once its key is authorized, the official Guix build farm at

https://ci.guix.gnu.org is automatically used unless the --no-substitutes flag

is supplied. This default list of substitute servers is overridable both on a

guix-daemon level and when you invoke guix commands. See examples below for

the various ways of adding dongcarl's substitute server after having authorized

his signing key.

Change the default list of substitute servers by starting guix-daemon with

the --substitute-urls option (you will likely need to edit your init script):

guix-daemon <cmd> --substitute-urls='https://guix.carldong.io https://ci.guix.gnu.org'

Override the default list of substitute servers by passing the

--substitute-urls option for invocations of guix commands:

guix <cmd> --substitute-urls='https://guix.carldong.io https://ci.guix.gnu.org'

For scripts under ./contrib/guix, set the SUBSTITUTE_URLS environment

variable:

export SUBSTITUTE_URLS='https://guix.carldong.io https://ci.guix.gnu.org'

Option 2: Disabling substitutes on an ad-hoc basis

If you prefer not to use any substitutes, make sure to supply --no-substitutes

like in the following snippet. The first build will take a while, but the

resulting packages will be cached for future builds.

For direct invocations of guix:

guix <cmd> --no-substitutes

For the scripts under ./contrib/guix/:

export ADDITIONAL_GUIX_COMMON_FLAGS='--no-substitutes'

Option 3: Disabling substitutes by default

guix-daemon accepts a --no-substitutes flag, which will make sure that,

unless otherwise overridden by a command line invocation, no substitutes will be

used.

If you start guix-daemon using an init script, you can edit said script to

supply this flag.

Guix Installation and Setup

This only needs to be done once per machine. If you have already completed the installation and setup, please proceed to perform a build.

Otherwise, you may choose from one of the following options to install Guix:

- Using the official shell installer script ⤓ skip to section

- Maintained by Guix developers

- Easiest (automatically performs most setup)

- Works on nearly all Linux distributions

- Only installs latest release

- Binary installation only, requires high level of trust

- Note: The script needs to be run as root, so it should be inspected before it's run

- Using the official binary tarball ⤓ skip to section

- Maintained by Guix developers

- Normal difficulty (full manual setup required)

- Works on nearly all Linux distributions

- Installs any release

- Binary installation only, requires high level of trust

- Using fanquake's Docker image ↗︎ external instructions

- Maintained by fanquake

- Easy (automatically performs some setup)

- Works wherever Docker images work

- Installs any release

- Binary installation only, requires high level of trust

- Using a distribution-maintained package ⤓ skip to section

- Maintained by distribution's Guix package maintainer

- Normal difficulty (manual setup required)

- Works only on distributions with Guix packaged, see: https://repology.org/project/guix/versions

- Installs a release decided on by package maintainer

- Source or binary installation depending on the distribution

- Building from source ⤓ skip to section

- Maintained by you

- Hard, but rewarding

- Can be made to work on most Linux distributions

- Installs any commit (more granular)

- Source installation, requires lower level of trust

Options 1 and 2: Using the official shell installer script or binary tarball

The installation instructions for both the official shell installer script and the binary tarballs can be found in the GNU Guix Manual's Binary Installation section.

Note that running through the binary tarball installation steps is largely equivalent to manually performing what the shell installer script does.

Note that at the time of writing (July 5th, 2021), the shell installer script

automatically creates an /etc/profile.d entry which the binary tarball

installation instructions do not ask you to create. However, you will likely

need this entry for better desktop integration. Please see this

section for instructions on how to add a

/etc/profile.d/guix.sh entry.

Regardless of which installation option you chose, the changes to

/etc/profile.d will not take effect until the next shell or desktop session,

so you should log out and log back in.

Option 3: Using fanquake's Docker image

Please refer to fanquake's instructions here.

Option 4: Using a distribution-maintained package

Note that this section is based on the distro packaging situation at the time of writing (July 2021). Guix is expected to be more widely packaged over time. For an up-to-date view on Guix's package status/version across distros, please see: https://repology.org/project/guix/versions

Debian / Ubuntu

Guix is available as a distribution package in Debian and Ubuntu .

To install:

sudo apt install guix

Arch Linux

Guix is available in the AUR as

guix, please follow the

installation instructions in the Arch Linux Wiki (live

link,

2021/03/30

permalink)

to install Guix.

At the time of writing (2021/03/30), the check phase will fail if the path to

guix's build directory is longer than 36 characters due to an anachronistic

character limit on the shebang line. Since the check phase happens after the

build phase, which may take quite a long time, it is recommended that users

either:

- Skip the

checkphase- For

makepkg:makepkg --nocheck ... - For

yay:yay --mflags="--nocheck" ... - For

paru:paru --nocheck ...

- For

- Or, check their build directory's length beforehand

- For those building with

makepkg:pwd | wc -c

- For those building with

Option 5: Building from source

Building Guix from source is a rather involved process but a rewarding one for those looking to minimize trust and maximize customizability (e.g. building a particular commit of Guix). Previous experience with using autotools-style build systems to build packages from source will be helpful. hic sunt dracones.

I strongly urge you to at least skim through the entire section once before you start issuing commands, as it will save you a lot of unnecessary pain and anguish.

Installing common build tools

There are a few basic build tools that are required for most things we'll build, so let's install them now:

Text transformation/i18n:

autopoint(sometimes packaged ingettext)help2manpo4atexinfo

Build system tools:

g++w/ C++11 supportlibtoolautoconfautomakepkg-config(sometimes packaged aspkgconf)makecmake

Miscellaneous:

gitgnupgpython3

Building and Installing Guix's dependencies

In order to build Guix itself from source, we need to first make sure that the necessary dependencies are installed and discoverable. The most up-to-date list of Guix's dependencies is kept in the "Requirements" section of the Guix Reference Manual.

Depending on your distribution, most or all of these dependencies may already be packaged and installable without manually building and installing.

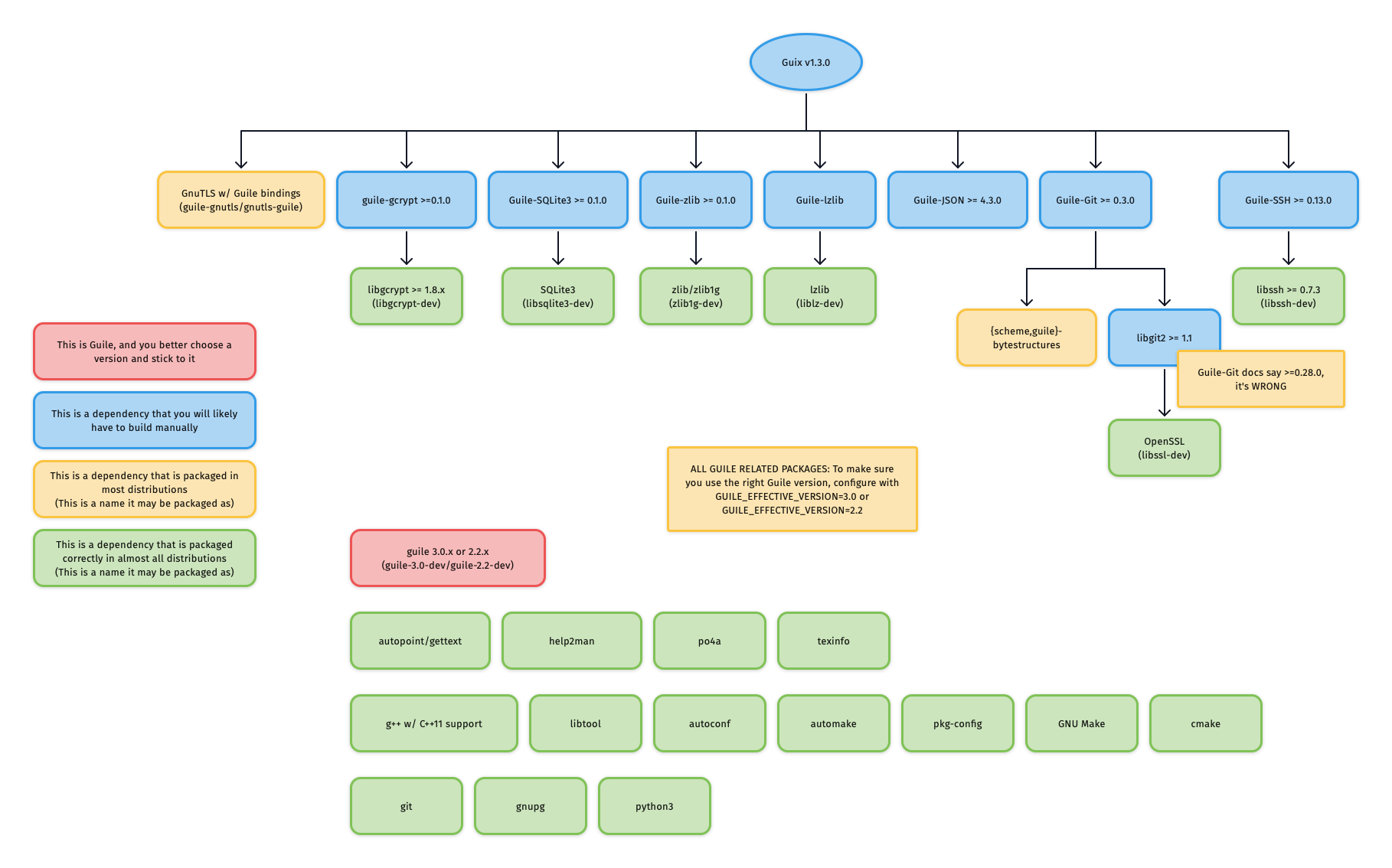

For reference, the graphic below outlines Guix v1.3.0's dependency graph:

If you do not care about building each dependency from source, and Guix is already packaged for your distribution, you can easily install only the build dependencies of Guix. For example, to enable deb-src and install the Guix build dependencies on Ubuntu/Debian:

sed -i 's|# deb-src|deb-src|g' /etc/apt/sources.list

apt update

apt-get build-dep -y guix

If this succeeded, you can likely skip to section "Building and Installing Guix itself".

Guile

Corner case: Multiple versions of Guile on one system

It is recommended to only install the required version of Guile, so that build systems do not get confused about which Guile to use.

However, if you insist on having more versions of Guile installed on

your system, then you need to consistently specify

GUILE_EFFECTIVE_VERSION=3.0 to all

./configure invocations for Guix and its dependencies.

Installing Guile

If your distribution splits packages into -dev-suffixed and

non--dev-suffixed sub-packages (as is the case for Debian-derived

distributions), please make sure to install both. For example, to install Guile

v3.0 on Debian/Ubuntu:

apt install guile-3.0 guile-3.0-dev

Mixing distribution packages and source-built packages

At the time of writing, most distributions have some of Guix's dependencies packaged, but not all. This means that you may want to install the distribution package for some dependencies, and manually build-from-source for others.

Distribution packages usually install to /usr, which is different from the

default ./configure prefix of source-built packages: /usr/local.

This means that if you mix-and-match distribution packages and source-built

packages and do not specify exactly --prefix=/usr to ./configure for

source-built packages, you will need to augment the GUILE_LOAD_PATH and

GUILE_LOAD_COMPILED_PATH environment variables so that Guile will look

under the right prefix and find your source-built packages.

For example, if you are using Guile v3.0, and have Guile packages in the

/usr/local prefix, either add the following lines to your .profile or

.bash_profile so that the environment variable is properly set for all future

shell logins, or paste the lines into a POSIX-style shell to temporarily modify

the environment variables of your current shell session.

# Help Guile v3.0.x find packages in /usr/local

export GUILE_LOAD_PATH="/usr/local/share/guile/site/3.0${GUILE_LOAD_PATH:+:}$GUILE_LOAD_PATH"

export GUILE_LOAD_COMPILED_PATH="/usr/local/lib/guile/3.0/site-ccache${GUILE_LOAD_COMPILED_PATH:+:}$GUILE_COMPILED_LOAD_PATH"

Note that these environment variables are used to check for packages during

./configure, so they should be set as soon as possible should you want to use

a prefix other than /usr.

Building and installing source-built packages

IMPORTANT: A few dependencies have non-obvious quirks/errata which are documented in the sub-sections immediately below. Please read these sections before proceeding to build and install these packages.

Although you should always refer to the README or INSTALL files for the most

accurate information, most of these dependencies use autoconf-style build

systems (check if there's a configure.ac file), and will likely do the right

thing with the following:

Clone the repository and check out the latest release:

git clone <git-repo-of-dependency>/<dependency>.git

cd <dependency>

git tag -l # check for the latest release

git checkout <latest-release>

For autoconf-based build systems (if ./autogen.sh or configure.ac exists at

the root of the repository):

./autogen.sh || autoreconf -vfi

./configure --prefix=<prefix>

make

sudo make install

For CMake-based build systems (if CMakeLists.txt exists at the root of the

repository):

mkdir build && cd build

cmake .. -DCMAKE_INSTALL_PREFIX=<prefix>

sudo cmake --build . --target install

If you choose not to specify exactly --prefix=/usr to ./configure, please

make sure you've carefully read the [previous section] on mixing distribution

packages and source-built packages.

Binding packages require -dev-suffixed packages

Relevant for:

- Everyone

When building bindings, the -dev-suffixed version of the original package

needs to be installed. For example, building Guile-zlib on Debian-derived

distributions requires that zlib1g-dev is installed.

When using bindings, the -dev-suffixed version of the original package still

needs to be installed. This is particularly problematic when distribution

packages are mispackaged like guile-sqlite3 is in Ubuntu Focal such that

installing guile-sqlite3 does not automatically install libsqlite3-dev as a

dependency.

Below is a list of relevant Guile bindings and their corresponding -dev

packages in Debian at the time of writing.

| Guile binding package | -dev Debian package |

|---|---|

| guile-gcrypt | libgcrypt-dev |

| guile-git | libgit2-dev |

| guile-gnutls | (none) |

| guile-json | (none) |

| guile-lzlib | liblz-dev |

| guile-ssh | libssh-dev |

| guile-sqlite3 | libsqlite3-dev |

| guile-zlib | zlib1g-dev |

guile-git actually depends on libgit2 >= 1.1

Relevant for:

- Those building

guile-gitfrom source againstlibgit2 < 1.1 - Those installing

guile-gitfrom their distribution whereguile-gitis built againstlibgit2 < 1.1

As of v0.5.2, guile-git claims to only require libgit2 >= 0.28.0, however,

it actually requires libgit2 >= 1.1, otherwise, it will be confused by a

reference of origin/keyring: instead of interpreting the reference as "the

'keyring' branch of the 'origin' remote", the reference is interpreted as "the

branch literally named 'origin/keyring'"

This is especially notable because Ubuntu Focal packages libgit2 v0.28.4, and

guile-git is built against it.

Should you be in this situation, you need to build both libgit2 v1.1.x and

guile-git from source.

Source: https://logs.guix.gnu.org/guix/2020-11-12.log#232527

Building and Installing Guix itself

Start by cloning Guix:

git clone https://git.savannah.gnu.org/git/guix.git

cd guix

You will likely want to build the latest release.

At the time of writing (November 2023), the latest release was v1.4.0.

git branch -a -l 'origin/version-*' # check for the latest release

git checkout <latest-release>

Bootstrap the build system:

./bootstrap

Configure with the recommended --localstatedir flag:

./configure --localstatedir=/var

Note: If you intend to hack on Guix in the future, you will need to supply the

same --localstatedir= flag for all future Guix ./configure invocations. See

the last paragraph of this

section for more

details.

Build Guix (this will take a while):

make -j$(nproc)

Install Guix:

sudo make install

Post-"build from source" Setup

Creating and starting a guix-daemon-original service with a fixed argv[0]

At this point, guix will be installed to ${bindir}, which is likely

/usr/local/bin if you did not override directory variables at

./configure-time. More information on standard Automake directory variables

can be found

here.

However, the Guix init scripts and service configurations for Upstart, systemd,

SysV, and OpenRC are installed (in ${libdir}) to launch

${localstatedir}/guix/profiles/per-user/root/current-guix/bin/guix-daemon,

which does not yet exist, and will only exist after root performs their first

guix pull.

We need to create a -original version of these init scripts that's pointed to

the binaries we just built and make install'ed in ${bindir} (normally,

/usr/local/bin).

Example for systemd, run as root:

# Create guix-daemon-original.service by modifying guix-daemon.service

libdir=# set according to your PREFIX (default is /usr/local/lib)

bindir="$(dirname $(command -v guix-daemon))"

sed -E -e "s|/\S*/guix/profiles/per-user/root/current-guix/bin/guix-daemon|${bindir}/guix-daemon|" "${libdir}"/systemd/system/guix-daemon.service > /etc/systemd/system/guix-daemon-original.service

chmod 664 /etc/systemd/system/guix-daemon-original.service

# Make systemd recognize the new service

systemctl daemon-reload

# Make sure that the non-working guix-daemon.service is stopped and disabled

systemctl stop guix-daemon

systemctl disable guix-daemon

# Make sure that the working guix-daemon-original.service is started and enabled

systemctl enable guix-daemon-original

systemctl start guix-daemon-original

Creating guix-daemon users / groups

Please see the relevant section in the Guix Reference Manual for more details.

Optional setup

At this point, you are set up to use Guix to build Bitcoin Core. However, if you want to polish your setup a bit and make it "what Guix intended", then read the next few subsections.

Add an /etc/profile.d entry

This section definitely does not apply to you if you installed Guix using:

- The shell installer script

- fanquake's Docker image

- Debian's

guixpackage

Background

Although Guix knows how to update itself and its packages, it does so in a

non-invasive way (it does not modify /usr/local/bin/guix).

Instead, it does the following:

-

After a

guix pull, it updates/var/guix/profiles/per-user/$USER/current-guix, and creates a symlink targeting this directory at$HOME/.config/guix/current -

After a

guix install, it updates/var/guix/profiles/per-user/$USER/guix-profile, and creates a symlink targeting this directory at$HOME/.guix-profile

Therefore, in order for these operations to affect your shell/desktop sessions

(and for the principle of least astonishment to hold), their corresponding

directories have to be added to well-known environment variables like $PATH,

$INFOPATH, $XDG_DATA_DIRS, etc.

In other words, if $HOME/.config/guix/current/bin does not exist in your

$PATH, a guix pull will have no effect on what guix you are using. Same

goes for $HOME/.guix-profile/bin, guix install, and installed packages.

Helpfully, after a guix pull or guix install, a message will be printed like

so:

hint: Consider setting the necessary environment variables by running:

GUIX_PROFILE="$HOME/.guix-profile"

. "$GUIX_PROFILE/etc/profile"

Alternately, see `guix package --search-paths -p "$HOME/.guix-profile"'.

However, this is somewhat tedious to do for both guix pull and guix install

for each user on the system that wants to properly use guix. I recommend that

you instead add an entry to /etc/profile.d instead. This is done by default

when installing the Debian package later than 1.2.0-4 and when using the shell

script installer.

Instructions

Create /etc/profile.d/guix.sh with the following content:

# _GUIX_PROFILE: `guix pull` profile

_GUIX_PROFILE="$HOME/.config/guix/current"

if [ -L $_GUIX_PROFILE ]; then

export PATH="$_GUIX_PROFILE/bin${PATH:+:}$PATH"

# Export INFOPATH so that the updated info pages can be found

# and read by both /usr/bin/info and/or $GUIX_PROFILE/bin/info

# When INFOPATH is unset, add a trailing colon so that Emacs

# searches 'Info-default-directory-list'.

export INFOPATH="$_GUIX_PROFILE/share/info:$INFOPATH"

fi

# GUIX_PROFILE: User's default profile

GUIX_PROFILE="$HOME/.guix-profile"

[ -L $GUIX_PROFILE ] || return

GUIX_LOCPATH="$GUIX_PROFILE/lib/locale"

export GUIX_PROFILE GUIX_LOCPATH

[ -f "$GUIX_PROFILE/etc/profile" ] && . "$GUIX_PROFILE/etc/profile"

# set XDG_DATA_DIRS to include Guix installations

export XDG_DATA_DIRS="$GUIX_PROFILE/share:${XDG_DATA_DIRS:-/usr/local/share/:/usr/share/}"

Please note that this will not take effect until the next shell or desktop session (log out and log back in).

guix pull as root

Before you do this, you need to read the section on choosing your security

model and adjust guix and guix-daemon flags according to

your choice, as invoking guix pull may pull substitutes from substitute

servers (which you may not want).

As mentioned in a previous section, Guix expects

${localstatedir}/guix/profiles/per-user/root/current-guix to be populated with

root's Guix profile, guix pull-ed and built by some former version of Guix.

However, this is not the case when we build from source. Therefore, we need to

perform a guix pull as root:

sudo --login guix pull --branch=version-<latest-release-version>

# or

sudo --login guix pull --commit=<particular-commit>

guix pull is quite a long process (especially if you're using

--no-substitutes). If you encounter build problems, please refer to the

troubleshooting section.

Note that running a bare guix pull with no commit or branch specified will

pull the latest commit on Guix's master branch, which is likely fine, but not

recommended.

If you installed Guix from source, you may get an error like the following:

error: while creating symlink '/root/.config/guix/current' No such file or directory

To resolve this, simply:

sudo mkdir -p /root/.config/guix

Then try the guix pull command again.

After the guix pull finishes successfully,

${localstatedir}/guix/profiles/per-user/root/current-guix should be populated.

Using the newly-pulled guix by restarting the daemon

Depending on how you installed Guix, you should now make sure that your init

scripts and service configurations point to the newly-pulled guix-daemon.

If you built Guix from source

If you followed the instructions for fixing argv[0], you can now do the following:

systemctl stop guix-daemon-original

systemctl disable guix-daemon-original

systemctl enable guix-daemon

systemctl start guix-daemon

Remember to set --no-substitutes in $libdir/systemd/system/guix-daemon.service and other customizations if you used them for guix-daemon-original.service.

If you installed Guix via the Debian/Ubuntu distribution packages

You will need to create a guix-daemon-latest service which points to the new

guix rather than a pinned one.

# Create guix-daemon-latest.service by modifying guix-daemon.service

sed -E -e "s|/usr/bin/guix-daemon|/var/guix/profiles/per-user/root/current-guix/bin/guix-daemon|" /etc/systemd/system/guix-daemon.service > /lib/systemd/system/guix-daemon-latest.service

chmod 664 /lib/systemd/system/guix-daemon-latest.service

# Make systemd recognize the new service

systemctl daemon-reload

# Make sure that the old guix-daemon.service is stopped and disabled

systemctl stop guix-daemon

systemctl disable guix-daemon

# Make sure that the new guix-daemon-latest.service is started and enabled

systemctl enable guix-daemon-latest

systemctl start guix-daemon-latest

If you installed Guix via lantw44's Arch Linux AUR package

At the time of writing (July 5th, 2021) the systemd unit for "updated Guix" is

guix-daemon-latest.service, therefore, you should do the following:

systemctl stop guix-daemon

systemctl disable guix-daemon

systemctl enable guix-daemon-latest

systemctl start guix-daemon-latest

Otherwise...

Simply do:

systemctl restart guix-daemon

Checking everything

If you followed all the steps above to make your Guix setup "prim and proper," you can check that you did everything properly by running through this checklist.

-

/etc/profile.d/guix.shshould exist and be sourced at each shell login -

guix describeshould not printguix describe: error: failed to determine origin, but rather something like:Generation 38 Feb 22 2021 16:39:31 (current) guix f350df4 repository URL: https://git.savannah.gnu.org/git/guix.git branch: version-1.2.0 commit: f350df405fbcd5b9e27e6b6aa500da7f101f41e7 -

guix-daemonshould be running from${localstatedir}/guix/profiles/per-user/root/current-guix

Troubleshooting

Derivation failed to build

When you see a build failure like below:

building /gnu/store/...-foo-3.6.12.drv...

/ 'check' phasenote: keeping build directory `/tmp/guix-build-foo-3.6.12.drv-0'

builder for `/gnu/store/...-foo-3.6.12.drv' failed with exit code 1

build of /gnu/store/...-foo-3.6.12.drv failed

View build log at '/var/log/guix/drvs/../...-foo-3.6.12.drv.bz2'.

cannot build derivation `/gnu/store/...-qux-7.69.1.drv': 1 dependencies couldn't be built

cannot build derivation `/gnu/store/...-bar-3.16.5.drv': 1 dependencies couldn't be built

cannot build derivation `/gnu/store/...-baz-2.0.5.drv': 1 dependencies couldn't be built

guix time-machine: error: build of `/gnu/store/...-baz-2.0.5.drv' failed

It means that guix failed to build a package named foo, which was a

dependency of qux, bar, and baz. Importantly, note that the last "failed"

line is not necessarily the root cause, the first "failed" line is.

Most of the time, the build failure is due to a spurious test failure or the

package's build system/test suite breaking when running multi-threaded. To

rebuild just this derivation in a single-threaded fashion (please don't forget

to add other guix flags like --no-substitutes as appropriate):

$ guix build --cores=1 /gnu/store/...-foo-3.6.12.drv

If the single-threaded rebuild did not succeed, you may need to dig deeper.

You may view foo's build logs in less like so (please replace paths with the

path you see in the build failure output):

$ bzcat /var/log/guix/drvs/../...-foo-3.6.12.drv.bz2 | less

foo's build directory is also preserved and available at

/tmp/guix-build-foo-3.6.12.drv-0. However, if you fail to build foo multiple

times, it may be /tmp/...drv-1 or /tmp/...drv-2. Always consult the build

failure output for the most accurate, up-to-date information.

python(-minimal): [Errno 84] Invalid or incomplete multibyte or wide character

This error occurs when your $TMPDIR (default: /tmp) exists on a filesystem

which rejects characters not present in the UTF-8 character code set. An example

is ZFS with the utf8only=on option set.

More information: https://github.com/python/cpython/issues/81765

openssl-1.1.1l and openssl-1.1.1n

OpenSSL includes tests that will fail once some certificate has expired. The workarounds from the GnuTLS section immediately below can be used.

For openssl-1.1.1l use 2022-05-01 as the date.

GnuTLS: test-suite FAIL: status-request-revoked

The derivation is likely identified by: /gnu/store/vhphki5sg9xkdhh2pbc8gi6vhpfzryf0-gnutls-3.6.12.drv

This unfortunate error is most common for non-substitute builders who installed Guix v1.2.0. The problem stems from the fact that one of GnuTLS's tests uses a hardcoded certificate which expired on 2020-10-24.

What's more unfortunate is that this GnuTLS derivation is somewhat special in

Guix's dependency graph and is not affected by the package transformation flags

like --without-tests=.

The easiest solution for those encountering this problem is to install a newer version of Guix. However, there are ways to work around this issue:

Workaround 1: Using substitutes for this single derivation

If you've authorized the official Guix build farm's key (more info here), then you can use substitutes just for this single derivation by invoking the following:

guix build --substitute-urls="https://ci.guix.gnu.org" /gnu/store/vhphki5sg9xkdhh2pbc8gi6vhpfzryf0-gnutls-3.6.12.drv

See this section for instructions on how to remove authorized keys if you don't want to keep the build farm's key authorized.

Workaround 2: Temporarily setting the system clock back

This workaround was described here.

Basically:

- Turn off NTP

- Set system time to 2020-10-01

- guix build --no-substitutes /gnu/store/vhphki5sg9xkdhh2pbc8gi6vhpfzryf0-gnutls-3.6.12.drv

- Set system time back to accurate current time

- Turn NTP back on

For example,

sudo timedatectl set-ntp no

sudo date --set "01 oct 2020 15:00:00"

guix build /gnu/store/vhphki5sg9xkdhh2pbc8gi6vhpfzryf0-gnutls-3.6.12.drv

sudo timedatectl set-ntp yes

Workaround 3: Disable the tests in the Guix source code for this single derivation

If all of the above workarounds fail, you can also disable the tests phase of

the derivation via the arguments option, as described in the official

package

reference.

For example, to disable the openssl-1.1 check phase: